Keywords helped us find audiences in the 2010s. In 2026, the edge comes from understanding why someone searches and the entities they care about. Semantic SEO analytics translates messy queries into intent, topics, and relationships—so you can ship content that answers needs, not just matches phrases.

Why keywords alone fall short

- Ambiguity: “apple care” could be support, insurance, or repair pricing.

- Surface coverage: Ranking for “pricing” means little if your page doesn’t resolve concerns like discounts, SLAs, or contract terms.

- Fragmentation: Ten posts chasing near-synonyms cannibalize each other and weaken topical authority.

Semantic SEO reframes the problem (similar to how cross-channel analytics connects touchpoints): identify entities (people, products, problems), their attributes (price, specs, availability), and relationships (X compares to Y, X solves problem Z). Then map those to user intent and journey stage.

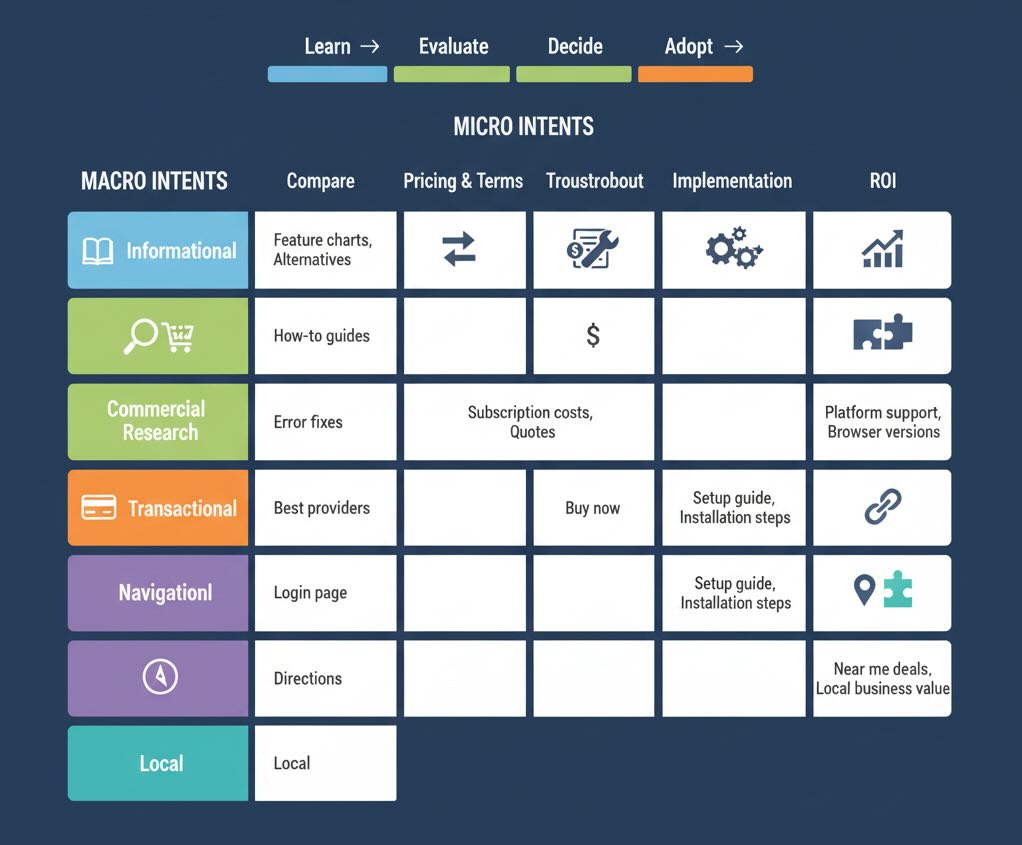

Layers of user intent (macro + micro)

- Macro intents: Informational, commercial research, transactional, navigational, local.

- Micro intents: compare vs. alternatives, troubleshoot, pricing & terms, implementation steps, compatibility, ROI.

Pair each intent with a journey stage (learn → evaluate → decide → adopt). Your analytics should report on performance at the intent level, not just by keyword.
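As a rough illustration of tagging queries with a micro intent and a journey stage, here is a minimal rule-based sketch. The cue patterns and the intent-to-stage mapping are assumptions made for this example, not a standard taxonomy; they would need tuning against your own query logs.

```python
import re

# Hypothetical cue patterns per micro intent; tune against real queries.
MICRO_INTENT_CUES = {
    "compare": [r"\bvs\.?\b", r"\bversus\b", r"\balternatives?\b", r"\bcompare\b"],
    "troubleshoot": [r"\bfix\b", r"\berror\b", r"\bnot working\b", r"\bmismatch(es)?\b"],
    "pricing_terms": [r"\bpric(e|es|ing)\b", r"\bcost\b", r"\bdiscounts?\b", r"\bsla\b"],
    "implementation": [r"\bhow to\b", r"\bsetup\b", r"\bset up\b", r"\binstall\b"],
    "compatibility": [r"\bintegrat(e|es|ion|ions)\b", r"\bcompatible\b", r"\bworks with\b"],
    "roi": [r"\broi\b", r"\bpayback\b"],
}

# Assumed default mapping from micro intent to journey stage.
STAGE_BY_INTENT = {
    "compare": "evaluate",
    "compatibility": "evaluate",
    "pricing_terms": "decide",
    "roi": "decide",
    "implementation": "adopt",
    "troubleshoot": "adopt",
}


def tag_query(query):
    """Return the matched micro intents and a journey stage for one query."""
    text = query.lower()
    intents = [
        name
        for name, patterns in MICRO_INTENT_CUES.items()
        if any(re.search(p, text) for p in patterns)
    ]
    # Queries with no recognizable cue default to the "learn" stage.
    stage = STAGE_BY_INTENT[intents[0]] if intents else "learn"
    return {"query": query, "micro_intents": intents, "stage": stage}


for q in ["stripe vs adyen reconciliation", "what is payment reconciliation"]:
    print(tag_query(q))
```

Rules like these are easy to audit, which helps when the tags feed reporting. A classifier or embedding model could replace them once you have labeled examples.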

Build your semantic map (knowledge graph lite)

- Collect signals: Search Console queries, site search logs, chatbot/CRM topics, support tickets, reviews, “People Also Ask,” and competitor headings.

- Extract entities & attributes: Pull product names, features, problems, industries, integrations, pricing models; note relationships (e.g., Product A integrates with Tool B).

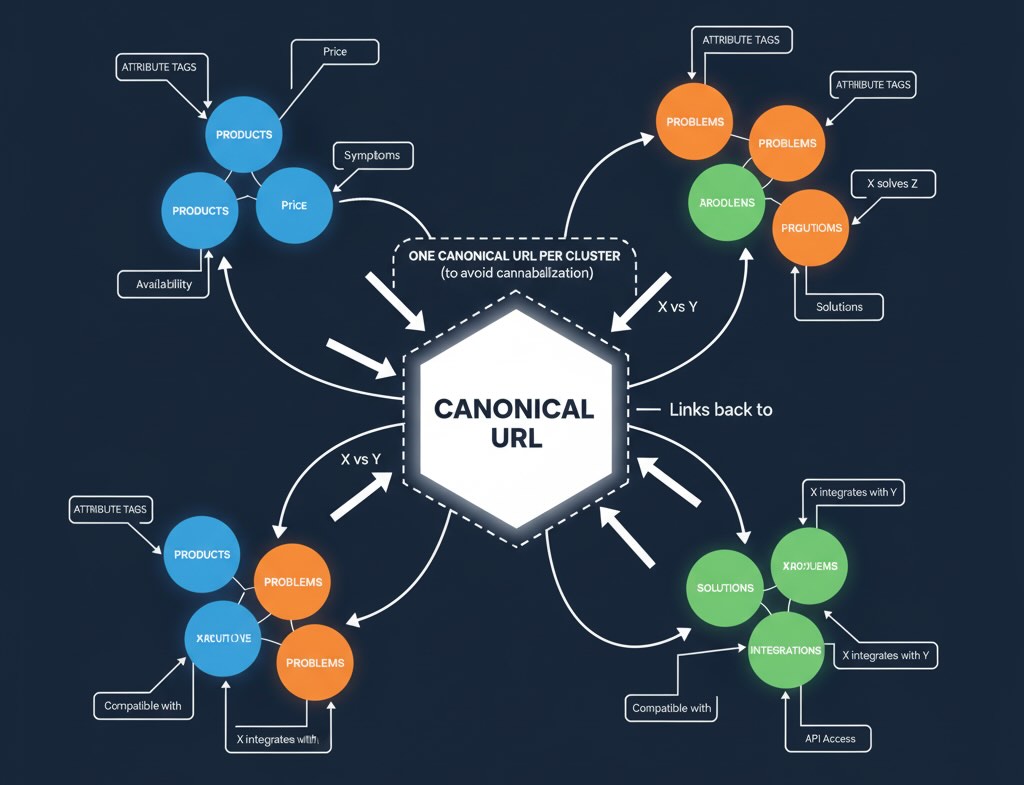

- Cluster by meaning: Use embeddings or clustering (even simple TF-IDF + cosine works) to group semantically similar queries; label each cluster with intent and stage.

- Choose canonical answers: One URL per cluster to avoid cannibalization; supporting articles handle sub-tasks and link back.
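The "simple TF-IDF + cosine" option can be sketched with the standard library alone. This is a minimal illustration under stated assumptions: whitespace tokenization, a smoothed IDF, greedy single-pass grouping against each cluster's first query, and a similarity threshold of 0.3 chosen only for the example. A real pipeline would more likely use a vectorizer or embedding model from a library.

```python
import math
from collections import Counter


def tfidf_vectors(queries):
    """Build sparse TF-IDF vectors (dicts) from whitespace-tokenized queries."""
    docs = [q.lower().split() for q in queries]
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        tf = Counter(doc)
        # Smoothed IDF so terms present in every query keep a small weight.
        vectors.append(
            {t: c * (math.log((1 + n) / (1 + df[t])) + 1) for t, c in tf.items()}
        )
    return vectors


def cosine(a, b):
    """Cosine similarity between two sparse vectors."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def cluster_queries(queries, threshold=0.3):
    """Greedy clustering: join the first cluster whose seed query is similar enough."""
    vectors = tfidf_vectors(queries)
    clusters = []  # each cluster is a list of indices; index 0 is the seed
    for i, vec in enumerate(vectors):
        for cluster in clusters:
            if cosine(vec, vectors[cluster[0]]) >= threshold:
                cluster.append(i)
                break
        else:
            clusters.append([i])
    return [[queries[i] for i in c] for c in clusters]


sample = [
    "stripe payout mismatch",
    "fix stripe payout mismatch",
    "pricing api rate limits",
    "pricing api rate limit examples",
]
for group in cluster_queries(sample):
    print(group)
```

Term overlap cannot see that "cost" and "pricing" mean the same thing, so treat the output as a first pass to review by hand before assigning one canonical URL per group.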

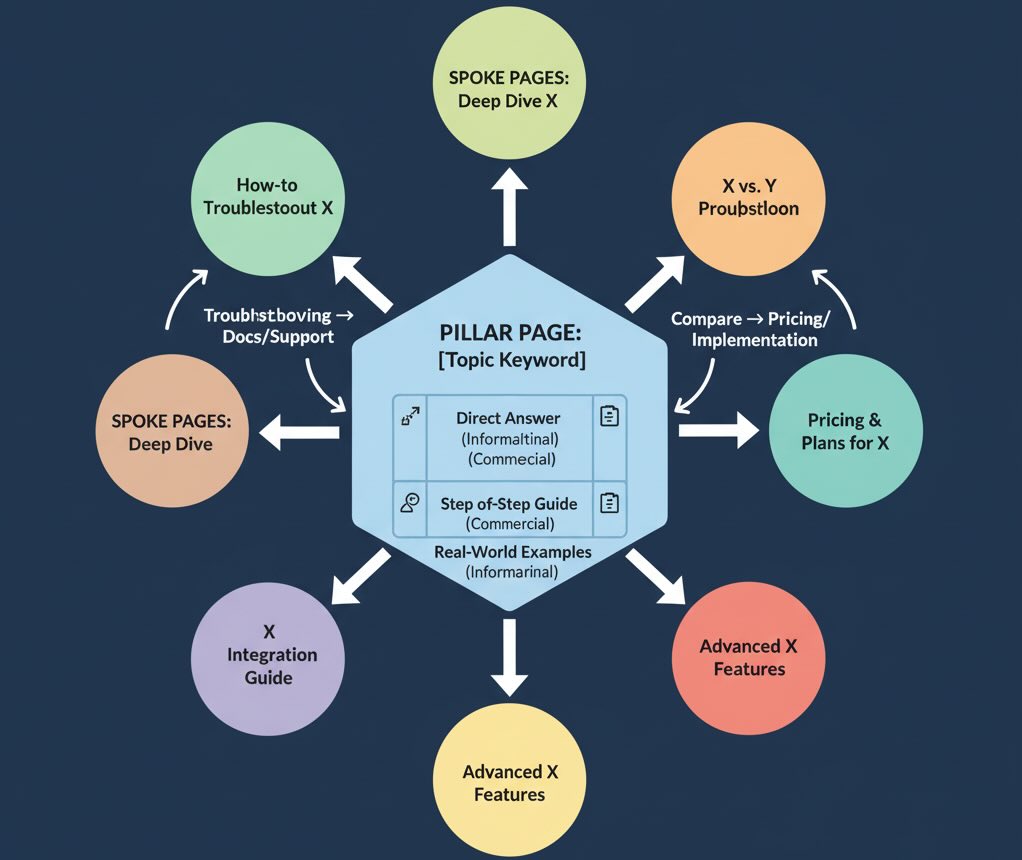

Outcome: a living topic graph, with hubs (pillars) like “Payment reconciliation” connected to spokes like “Stripe vs. Adyen reconciliation,” “How to fix payout mismatches,” and “Reconciliation checklist PDF.”
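One lightweight way to store such a graph is a list of (subject, relation, object) triples. The sketch below reuses the page titles from the example; the relation names `spoke_of` and `compares` are assumptions invented for illustration.

```python
from collections import defaultdict

# (subject, relation, object) triples. Relation names are hypothetical.
TRIPLES = [
    ("Stripe vs. Adyen reconciliation", "spoke_of", "Payment reconciliation"),
    ("How to fix payout mismatches", "spoke_of", "Payment reconciliation"),
    ("Reconciliation checklist PDF", "spoke_of", "Payment reconciliation"),
    ("Stripe vs. Adyen reconciliation", "compares", "Stripe"),
    ("Stripe vs. Adyen reconciliation", "compares", "Adyen"),
]


def build_index(triples):
    """Index subjects by object and relation for quick lookups."""
    index = defaultdict(lambda: defaultdict(list))
    for subject, relation, obj in triples:
        index[obj][relation].append(subject)
    return index


def spokes_of(index, hub):
    """Pages that should link back to the given hub."""
    return list(index[hub]["spoke_of"])


index = build_index(TRIPLES)
print(spokes_of(index, "Payment reconciliation"))
print(index["Stripe"]["compares"])
```

Keeping relations explicit makes it straightforward to derive internal links from the graph (every spoke links to its hub) instead of maintaining them by hand.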

Content architecture that earns intent

- Pillars & spokes: Pillar = comprehensive, evergreen; spokes = deep dives.

- Internal linking: Link by relationship, not just anchor match—comparison pages link to pricing and implementation; troubleshooting links to docs and support.

- Answer patterns: For informational queries, open with a direct answer (40–60 words), then expand. For commercial research, lead with a comparison table and decision criteria before prose.
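The 40–60 word guideline for direct answers can be checked automatically during editing. A minimal sketch, assuming the page body is plain text with paragraphs separated by blank lines (the function names are made up for this example):

```python
def opening_answer_length(page_text):
    """Word count of the first non-empty paragraph."""
    for paragraph in page_text.split("\n\n"):
        if paragraph.strip():
            return len(paragraph.split())
    return 0


def has_direct_answer(page_text, low=40, high=60):
    """True when the opening paragraph falls inside the target word range."""
    return low <= opening_answer_length(page_text) <= high


draft = " ".join(["word"] * 50) + "\n\nLonger explanation follows here."
print(opening_answer_length(draft), has_direct_answer(draft))
```

A length check says nothing about whether the opening actually answers the question, so it works best as a flag for human review.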

Structured data that disambiguates

Use schema to help search engines “see” your entities and page purpose:

- Article/FAQPage for answers and PAA visibility.

- Product, Offer, AggregateRating for commerce.

- HowTo for step sequences.

- SoftwareApplication and Organization where relevant.

Proper markup won’t replace quality, but it makes the relationship between your content and the query’s task explicit to search engines.
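For instance, FAQPage markup is usually emitted as JSON-LD. The sketch below builds it from question and answer pairs; the sample question and the placeholder answer are invented for illustration, and the helper name `faq_jsonld` is an assumption.

```python
import json


def faq_jsonld(pairs):
    """Build schema.org FAQPage markup from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


# Placeholder content; replace with the cluster's real top questions.
markup = faq_jsonld(
    [("How often do prices sync?", "Placeholder answer text for illustration.")]
)
print(json.dumps(markup, indent=2))
```

The printed JSON goes inside a `<script type="application/ld+json">` tag on the page, and the questions should match what is visibly answered there.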

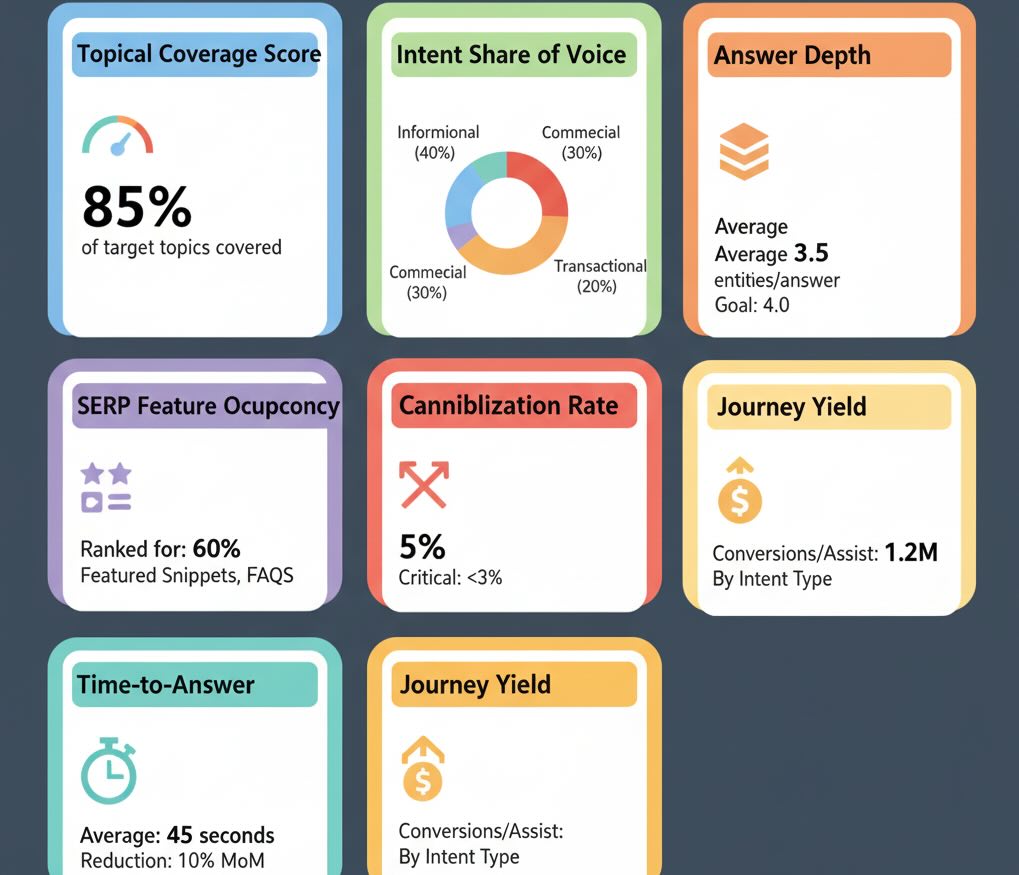

Metrics that matter (beyond rankings)

Measure at the intent cluster level (see also our guide on website metrics that matter):

- Topical Coverage Score: Share of target subtopics answered (e.g., 18/22 pricing questions covered).

- Intent Share of Voice (SOV): Your impressions/clicks vs. competitors for a cluster, not just a head term.

- Answer Depth: % of pages that include the key elements users need (table, steps, examples, pros/cons).

- SERP Feature Occupancy: Presence in PAA, snippets, images, videos, discussions for the cluster.

- Cannibalization Rate: # of URLs competing for the same intent; drive toward one canonical.

- Journey Yield: Conversions, demo starts, or assisted revenue per intent, not per keyword.

- Time-to-Answer: Days from cluster identified → page published → first impression/click; speed compounds.
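Several of these metrics reduce to simple ratios once data is grouped by cluster. The sketch below shows three of them under stated assumptions: the function names are hypothetical, competitor click figures are assumed to come from a third-party estimate, and cannibalization is reported as the list of URLs competing within a cluster.

```python
def topical_coverage(answered, targeted):
    """Share of target subtopics that have an answer, e.g. 18 of 22."""
    return answered / targeted if targeted else 0.0


def intent_share_of_voice(own, competitors):
    """Own clicks (or impressions) divided by the cluster total."""
    total = own + sum(competitors.values())
    return own / total if total else 0.0


def cannibalized_clusters(cluster_urls):
    """Clusters where more than one distinct URL competes for the same intent."""
    return {
        cluster: sorted(set(urls))
        for cluster, urls in cluster_urls.items()
        if len(set(urls)) > 1
    }


print(round(topical_coverage(18, 22), 2))
print(intent_share_of_voice(300, {"competitor_a": 500, "competitor_b": 200}))
print(cannibalized_clusters({"pricing": ["/pricing", "/plans"], "security": ["/security"]}))
```

Reporting these per cluster rather than per keyword keeps the dashboard aligned with the canonical URL mapping described earlier.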

Practical 7-step workflow

- Harvest queries & conversations (GSC, on-site search, sales notes, support).

- Normalize & cluster into semantic groups; tag each with macro/micro intent and journey stage.

- Gap analysis: Where do users ask but you have no canonical URL or thin coverage?

- Design briefs that specify: entities to include, synonyms, must-answer questions, evidence (data, examples), and SERP features to target.

- Draft for resolution, not fluff: Lead with the answer; add comparisons, steps, visuals, and real examples.

- Add schema & link graph: Mark up the page and connect it within your topic cluster.

- Monitor & iterate: Track intent SOV, feature occupancy, and journey yield; improve depth and internal links where weak.
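The gap-analysis step in the workflow above can be sketched as a filter over cluster records. The field names, the sample URLs, and the 0.5 coverage cutoff for "thin" are assumptions made for this example.

```python
def find_gaps(clusters, min_coverage=0.5):
    """List clusters with no canonical URL or thin coverage, highest demand first."""
    gaps = []
    for c in sorted(clusters, key=lambda c: c["impressions"], reverse=True):
        if not c.get("canonical_url"):
            gaps.append((c["name"], "no canonical URL"))
            continue
        asked = c.get("asked", 0)
        coverage = c.get("answered", 0) / asked if asked else 0.0
        if coverage < min_coverage:
            gaps.append((c["name"], "thin coverage"))
    return gaps


# Hypothetical cluster records for illustration.
clusters = [
    {"name": "rate limits", "impressions": 900,
     "canonical_url": "/docs/rate-limits", "asked": 10, "answered": 3},
    {"name": "pricing api setup", "impressions": 1200,
     "canonical_url": None, "asked": 8, "answered": 0},
    {"name": "security & PII", "impressions": 400,
     "canonical_url": "/security", "asked": 5, "answered": 5},
]
print(find_gaps(clusters))
```

Sorting by impressions puts the gaps with the most visible demand at the top of the brief queue.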

Example: “Pricing integration” cluster (B2B SaaS)

- User micro-intents: pricing API setup, sync frequency, rate limits, compare to manual export, security & PII.

- Pillar: “Pricing Integration: Methods, Limits, and Best Practices.”

- Spokes: “REST vs. Webhooks for Price Sync,” “Rate Limits Explained with Examples,” “Security Checklist for Pricing APIs,” “Manual vs. Automated: Cost & Risk Analysis.”

- Measurement: Intent SOV for the cluster, % of pages with code snippets & rate tables (answer depth), demo starts attributed to this cluster within 7 days (journey yield).
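The 7-day journey-yield figure depends on an attribution rule. One possible rule, sketched below, counts each user once if a demo start falls within the window after their first visit to a page in the cluster. The event shapes, user IDs, and first-visit rule are assumptions for illustration; your analytics stack may attribute differently.

```python
from datetime import datetime, timedelta


def demo_starts_within(visits, demo_starts, window_days=7):
    """Count users with a demo start within window_days of their first cluster visit."""
    window = timedelta(days=window_days)
    first_visit = {}
    for user, ts in visits:
        if user not in first_visit or ts < first_visit[user]:
            first_visit[user] = ts
    attributed = {
        user
        for user, ts in demo_starts
        if user in first_visit and timedelta(0) <= ts - first_visit[user] <= window
    }
    return len(attributed)


# Hypothetical (user_id, timestamp) events.
visits = [
    ("u1", datetime(2026, 1, 1)),
    ("u2", datetime(2026, 1, 1)),
    ("u3", datetime(2026, 1, 5)),
]
demo_starts = [
    ("u1", datetime(2026, 1, 3)),   # inside the window
    ("u2", datetime(2026, 1, 20)),  # outside the window
    ("u4", datetime(2026, 1, 2)),   # never visited the cluster
]
print(demo_starts_within(visits, demo_starts))
```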

Common pitfalls to avoid

- Chasing synonyms: 10 look-alike posts dilute authority; consolidate.

- Ignoring micro-intents: Ranking for “pricing” but hiding discounts, terms, or procurement steps.

- Walls of text: SERPs reward clear structures—tables, steps, visuals, FAQs.

- No canonical mapping: Two URLs “answering” the same cluster split signals.

- Publishing without evidence: Add data points, short case wins, or benchmarks to prove value.

Content elements that boost comprehension

- Decision tables: side-by-side comparisons with “best for” guidance.

- Process visuals: flows for setup, troubleshooting, or migration.

- Checklists: condensed, scannable actions users can save.

- Embedded FAQs: driven by your cluster’s top questions, not generic filler.

Bottom line

Semantic SEO analytics elevates your program from “ranking for words” to resolving tasks. When you model entities and intents, cluster by meaning, and measure outcomes at the intent level, your content becomes easier for algorithms to understand—and more i